True rasterdemo ("Raster Interrupts"-like) working on GeForce/Radeon! -- Comments?

category: general [glöplog]

Hello.

I'm the founder of BlurBusters and TestUFO.

Other than that, you probably don't know me, but... back as a kid, I programmed some relatively unknown Commodore 64 hobby apps that used raster interrupts to create a scrolling zone. I once made a clone of Uridium completely in Supermon 64 (albeit with no sound, as I am a deafie) -- http://www.lemon64.com/forum/viewtopic.php?t=6241 ... I'm better known in a very different circle of geeks for my display testing inventions, as all the big websites use the tests that I have invented (TestUFO.com, pursuit camera, etc.), and I am the world's first person to test a 480 Hz prototype monitor.

Now... I have a hobby side project I'd like to introduce.

- I've found a way to generate genuine rasters on GPUs (GeForces/Radeons). And in a cross-platform manner. And it works at multiple refresh rates: Videos of my Tearline Jedi Demo

- The authors of the book "Racing The Beam" have retweeted my coverage of this: Twitter Thread

- I have already helped some emulator authors implement beam racing (synchronization of emulator raster with real world raster for lagless operation), including WinUAE

Before I opensource -- anyone wanna work together in polishing this "Hobby Project" up for some kind of a demoscene submission? (Assembly 2018)

Here's some videos:

And get this (AFAIK) -- I think this is probably the world's first cross-platform true-raster-generated Kefrens Bars (Alcatraz Bars) on modern GPUs -- using NVIDIA / GeForce on both PC / Mac! It's true raster generated, not simulated -- using over 100 VSYNC OFF tearlines per refresh cycle!

Watch the video to the end; I intentionally glitch (and accidentally crash) it by launching a Windows application in the background, which screws around with the raster timings. (Timing errors simply manifest themselves as tearline jitter, so the Kefrens Bars simply pixellate with increasingly worse timing errors.)

All of these are impossible to screenshot since they are realtime raster generated graphics -- true raster-timed tearlines. The Kefrens Bars demo runs at 7000 to 8000 VSYNC OFF tearlines per second on my GeForce GPU.

It compiles on both PC/Mac and works on both GeForces/Radeons. It also works (at a lower frameslice rate) on Intel GPUs and Android GPUs.

Thanks to a technique that distilled rasters to three minimum requirements:

(1) Existence of precision counters (e.g. RDTSC instruction)

(2) Existence of VSYNC timestamps

(3) Existence of tearlines (aka VSYNC OFF)

This made rasters cross-platform. With (1)+(2)+(3) it becomes unnecessary to poll a raster register or use APIs such as RasterStatus.ScanLine. The position of a tearline is a precision time-based offset from a VSYNC timestamp. Thus, with some creativity, rasters now become cross-platform (works on D3D, OpenGL, Mantle, Metal, Direct2D, etc.) as long as the minimum requirements are met, and it becomes possible to guess the scanline position of a tearline to within a very small margin.

This works at any refresh rate. We even found a way to make it work during variable refresh rates too, in the Emulator thread. The raster accuracy is within 1-2% without knowing the signal's horizontal scanrate, and within 0.1% when the horizontal scanrate is known. Minimally, on any platform, all one needs is to listen to the VSYNC timestamps / VSYNC events -- the heartbeat of the blanking intervals -- and then use a precision counter to guess the current raster relatively accurately, without needing a raster register. All modern systems have microsecond-precision counters available.
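To illustrate the time-offset idea, here is a minimal Python sketch of how a raster line could be guessed from a VSYNC timestamp and a precision clock. The function name, its parameters and the 1125-line vertical total are assumptions for illustration only; they are not taken from the Tearline Jedi source.

```python
def estimate_scanline(now, last_vsync, refresh_period, total_lines):
    """Guess the current raster line from the time elapsed since the last VSYNC.

    total_lines is the vertical total (visible lines plus blanking). It is an
    assumption here; a real program would get or estimate it from the video mode.
    Line 0 is wherever the VSYNC timestamp falls, so mapping the result onto
    visible rows needs an extra offset that this sketch leaves out.
    """
    # Fraction of the refresh cycle that has elapsed. The modulo keeps the
    # guess sane even if one or more VSYNC timestamps were missed.
    phase = ((now - last_vsync) % refresh_period) / refresh_period
    return int(phase * total_lines)


# Example: halfway through a 60 Hz refresh with an assumed 1125-line total.
print(estimate_scanline(1 / 120, 0.0, 1 / 60, 1125))  # 562
```

In practice `now` and `last_vsync` would come from the same high-resolution counter, so only their difference matters.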

Some of you might have noticed the behaviour of tearlines being rasterlike (but probably have never seen this commandeered into realtime raster Kefrens Bars, I bet!)

I suspect this is the first time it was done in a truly cross-platform way, without a raster register, since the raster can easily be extrapolated using a precision counter (RDTSC, etc).

All VSYNC OFF tearlines created in humankind are simply rasters.

With some best practices I have learned over the last few months, and some tearline-precision-improving tricks, it works even in higher-level languages like C#. You can see how the mouse pointer arrow is almost locked to the exact tearline, even without a raster register! Watch the mouse dragging:

The raster number is simply 'guessed' as a precision clock offset from a VSYNC timestamp, and tearlines are then precisely timed via precision framebuffer present calls, with some best practices such as a pre-Flush() before the precision raster sleep to improve Present() / glutSwapBuffers() raster precision.
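The flush-wait-present sequence can be sketched roughly as follows. This is only an illustration under stated assumptions: `flush` and `present` are hypothetical callbacks standing in for whatever the graphics API provides (e.g. a Flush call and Present() / glutSwapBuffers()), and a plain busy-wait stands in for the "precision raster sleep".

```python
import time


def present_at_scanline(target_line, last_vsync, refresh_period, total_lines,
                        flush, present, clock=time.perf_counter):
    """Flush early, wait until the beam should reach target_line, then present."""
    # Time at which the raster is expected to reach target_line in this cycle.
    target_time = last_vsync + (target_line / total_lines) * refresh_period
    flush()                        # get pending GPU work out of the way first
    while clock() < target_time:   # busy-wait on the precision counter
        pass
    present()                      # with VSYNC OFF, the tearline lands near target_line
    return target_time
```

Calling this once per frameslice with increasing `target_line` values would give one tearline per call. How close each tearline lands depends on the jitter of the present call itself, which this sketch does not model.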

It is now possible to get tearlines darn near scanline-exact on most platforms from the last 8 years (including Android GPUs too). You may only get maybe 4 tearlines per refresh cycle on an 8-year-old GPU, 10 tearlines per refresh cycle on a few-year-old high-end Android, and well over 100 tearlines per refresh cycle on a 1080/Titan/etc -- but either way, they can still be positioned fairly raster-accurately.

I'll open source either way, but debating whether it should hit the demoscene before I opensource.

Currently, it would qualify under Assembly 2018's "Realtime Wild" rules. It has the graphics of the retro categories -- but runs on the GPU of the modern realtime category -- so it's kind of a hybrid that's difficult to slot into a demoscene category -- the commandeering of VSYNC OFF tearlines to generate realtime rasters.

Note: I also have a thread on Blur Busters Forums about The Tearline Jedi Demo.

Comments?

Cheers

Chief Blur Buster

...One typo in the lemon64 link. Fixed link: My little-known Uridium Clone from many years ago. Apologies! ;)

I'm just entertained that it turns out the people who have been saying for years that coding on modern hardware is not fun because you can't reach the bare metal apparently haven't been looking hard enough to find their old niche :)

amazing :)

FWIW, photodiode oscilloscope input lag tests show that there's only 3 milliseconds between the Direct3D API Present() call and the photons showing up on the screen.

Any part of the screen -- top edge, center, bottom edge -- all equally 3ms during beamraced frame slices.

At least on my line-buffered LCD gaming displays -- most modern gaming LCDs synchronize LCD refresh to the cable scanout, so they top-to-bottom refresh like a CRT, with a pixel-response fade lag -- like this high speed video (high speed video of a screen alternating between black screen & white screen).

Hilarious, absurd, and wonderful in equal measure. I love it.

Not bad.

I guess it's not as easy as

Code:

LDA #color
STA $d021

Whatever you do with this cool thing. Good luck!

looks cool, hope to see a polished demo out from it this summer :)

very awesome :) making it windowed will be even more hilarious :D (but DWM afaik triple buffers everything, eliminating tearlines)

BTW chiefblurbuster, a BIG thank you for all the projects you run, please keep them up!

that scene spirit <3 sta $0314

Super dope! I really hope to see a full production come out of this ^-^

hmm i love it that somebody's trying such stuff!

but regretfully the end result looks like shit, if "rasters" are not stable then what's the "point" anyway

Neato.

Quote:

hmm i love it that somebody's trying such stuff!

but regretfully the end result looks like shit, if "rasters" are not stable then what's the "point" anyway

Not necessarily, since:

- This is a prototype

- Nothing stops you from doing fancy 3D graphics per frameslice

- Raster jitter can be useful as it invokes the raster artifacts of yesteryear

- For practical applications like emulators, there are ways to hide raster jitter via the jittermargin techniques (duplicate frames where the tearline is; so no tearline appears)

Four emulator authors are currently collaborating in the Blur Busters Forums, and two emulators have already implemented this, including the WinUAE emulator (WinUAE author's announcement -- sync emuraster with realraster!!)

+Shoutout to Calamity!!! I also want to give props to Calamity of GroovyMAME, who independently invented frameslice beamracing at the same time (unbeknownst to me). Calamity is now participating in the emulator discussion thread.

For emulators -- in practice, it's possible to still get benefits with just 1, 2, 3, or 4 frameslices per refresh cycle. 1 frameslice is simply racing the VBI. 2 frameslices are simply the top and bottom halves. And so on. The screen can be subdivided coarsely or finely.

At 120 frameslices per 240p refresh cycle, each frameslice is 2 pixels tall. Rasterplotting on the previous emu refresh cycle can give a much bigger jittermargin of one full refresh cycle minus one frameslice.

So beamraced frameslices can improve emulators on legacy devices (e.g. Androids, Raspberry Pi) because CPUs no longer need to surge-execute, and can just emulate accurately (Higan-style) in realtime while beamracing the frameslices on the fly.

On the other hand, at the other extreme (high performance), 8000 frameslices per second is about half of the NTSC scanrate (roughly 15.7 kHz). So it's now possible to have a frameslice beamrace margin of just 4 scanlines (2 frameslices of 2 scanlines each -- 120 frameslices per 240p refresh cycle).
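The frameslice arithmetic can be checked with a few lines of Python. This is just a sketch of the numbers quoted here; the function names are made up for illustration, and the 60 Hz refresh rate is an assumption.

```python
def frameslice_geometry(slices_per_refresh, visible_lines, margin_slices=2):
    """Return (lines per frameslice, beamrace margin in scanlines)."""
    lines_per_slice = visible_lines / slices_per_refresh
    margin_lines = margin_slices * lines_per_slice
    return lines_per_slice, margin_lines


def slices_per_second(slices_per_refresh, refresh_hz):
    """Tearlines per second the GPU must sustain for a given slice count."""
    return slices_per_refresh * refresh_hz


# 120 frameslices over a 240p refresh: 2-line slices, 4-line margin.
print(frameslice_geometry(120, 240))  # (2.0, 4.0)
# At an assumed 60 Hz, that needs 7200 tearlines per second.
print(slices_per_second(120, 60))     # 7200
```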

Either way, it provides more nostalgia accuracy and is more forgiving on the CPU than RunAhead approaches (RetroArch), which are amazing too, but RunAhead is simply another lag-reducing tool in the input-lag-tricks sandbox.

That said, beamracing also works at any refresh rate (60Hz, 120Hz, 144Hz, 240Hz) and even on GSYNC/FreeSync. (First, the refresh cycle must be manually triggered, then you beamrace that refresh cycle). WinUAE supports beamracing on GSYNC and FreeSync displays too -- I helped Toni make that work! There are tons of best practices we've come up with to achieve universal beamracing.

Displays are still transmitted top-to-bottom whether it's a 1930s analog TV broadcast or a 2020s DisplayPort cable, so beamracing is still with us today. Even micropacketization simply bunches the scanlines together (e.g. 2 scanlines or 4 scanline groups). The serialization of 2D imagery over a 1D cable enforces that, and we've stuck to top-to-bottom scan on default screen orientation -- even on mobile screens (which are also beamraceable too).

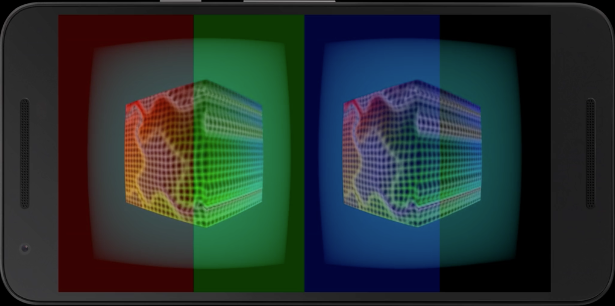

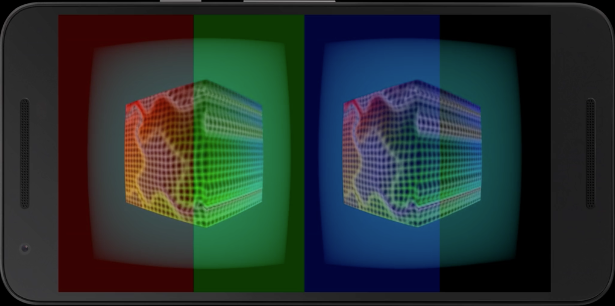

And for virtual reality, the jittermargin technique can also be done for 3D graphics too!

Usually for virtual reality, the screen is subdivided into 4.

The great thing is that rotated displays are scanning left-to-right or right-to-left.

So you can render the left eye while displaying the right eye. Or vice versa.

This is already being done for latency reduction in some Android VR applications, e.g. for Google Cardboard and in certain GearVR apps.

At the end of the day:

I still need a co-credit/helper/volunteer to fancy up the Tearline Jedi Demo if I want to submit it to a demoscene event. A 3D programmer with prior raster experience is ideal -- to do a more proper mix between nostalgia and modern. The work would happen in my private git repo, which will be open sourced after a demoscene submission...

Any takers?

Cheers.

So did I get this right, the point with this frame slicing thing is to reduce the latency between the emulator and "real world", from 1 frame to a fraction of a frame? (Of course even without frame slicing the minimum latency can be smaller if up to 1 frame of latency jitter is allowed, but I like to think that it's best to keep latencies constant)

Quote:

So did I get this right, the point with this frame slicing thing is to reduce the latency between the emulator and "real world", from 1 frame to a fraction of a frame? (Of course even without frame slicing the minimum latency can be smaller if up to 1 frame of latency jitter is allowed, but I like to think that it's best to keep latencies constant)

Yep. Consistent subframe latency.

The input lag is about 2 frame slices if you want a tight jitter margin. So 8000 frameslices per second means 2/8000sec of input lag compared to the original machine.
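As a quick sanity check of that figure, here is the arithmetic in Python (a sketch only; the function name is hypothetical):

```python
def beamrace_lag_ms(slices_per_second, margin_slices=2):
    """Added input lag, in milliseconds, for a margin of N frameslices."""
    return margin_slices / slices_per_second * 1000.0


# 2 frameslices at 8000 frameslices per second.
print(f"{beamrace_lag_ms(8000):.2f} ms")  # 0.25 ms
```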

The WinUAE lagless vsync (beam raced frameslicing) worked on a laptop with an old Intel 4000 series GPU (sufficient performance for 6 frameslices per refresh cycle).

Now has consistent instantaneous subframe latency nearly identical to an original Amiga. Even for mid-screen input reads (e.g. to control bottom-of-screen pinball flippers) -- some pinball games are amazingly responsive now.

Theoretically frame slices can be as tiny as 1 pixel tall (1 scanline), but that would require a GPU capable of roughly 15,700 tearlines per second (the NTSC horizontal scanrate is about 15,734 Hz; 15,625 Hz is the PAL figure). So theoretically you can reduce lag to almost just 1 scanline!

My GPU can only reach half that -- 8000 tearlines per second -- but that's still an incredibly tight beam racing margin that is now theoretically possible.

WinUAE's frameslice count per refresh cycle is configurable, so you can adjust your beamracing margin for an older or newer GPU. It even works with the CRT-emulating fuzzyline shaders (HLSL style), albeit at a lower frame slice rate.

NOTE: Some monitors are buffered and laggy, especially televisions. However, most gaming monitors are unbuffered (well, line-buffered processing).

However, beamracing still reduces input lag between API call and the pixels hitting the video cable. Whatever the display does next is beyond our control, except we can just shop for displays that have the lowest input lag (if we were buying a new display, anyway).

The displays that DisplayLag.com lists at ~"10ms" input lag are simply showing screen-center input lag (1/2 of 1/60sec of scanout = 8.3ms, plus some partial LCD GtG time = about 10-11ms), since the stopwatch begins at VBI and stops at screen center.

So DisplayLag.com "10ms" displays actually only have 2ms-3ms with beam raced frameslices.
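The subtraction behind that estimate, as a small Python sketch. It ignores the blanking interval, and the 10.5 ms input is simply an assumed mid-point of the "about 10-11ms" figure, not a measurement.

```python
def scanout_lag_ms(refresh_hz, row_fraction=0.5):
    """Time for scanout to get from the start of the refresh to a given row fraction."""
    return row_fraction / refresh_hz * 1000.0


center = scanout_lag_ms(60)   # scanout time to screen center at 60 Hz
measured = 10.5               # assumed mid-point of the "about 10-11ms" figure
print(f"scanout to center: {center:.1f} ms")             # 8.3 ms
print(f"remaining lag:     {measured - center:.1f} ms")  # 2.2 ms
```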

Quote:

I'm just entertained that it turns out the people who have been saying for years that coding on modern hardware is not fun because you can't reach the bare metal apparently haven't been looking hard enough to find their old niche :)

<3

chiefblurbuster: for latency reduction in emulators (etc) this is an awesome thing, no doubt. the whole process that you describe reminds me more of screen border breaking than of rasterbars, but maybe that's just me. in any case, thanks for the huge effort in researching this :)

Awesome! :)